ODM 0.9.8 Adds Multispectral, 16bit TIFFs Support and Moar!

WebODM introduced support for plant health algorithms about a month ago. It was no secret that we concurrently started work to support TIFFs file inputs and multispectral cameras, both features that have been highly requested.

Today we are excited to announce the release of ODM 0.9.8!

TIFF Support

Up until now ODM was able to process only JPG files. With this release we added support for processing TIFF files, both 8bit and 16bit. TIFF is a popular format especially with multispectral and thermal cameras.

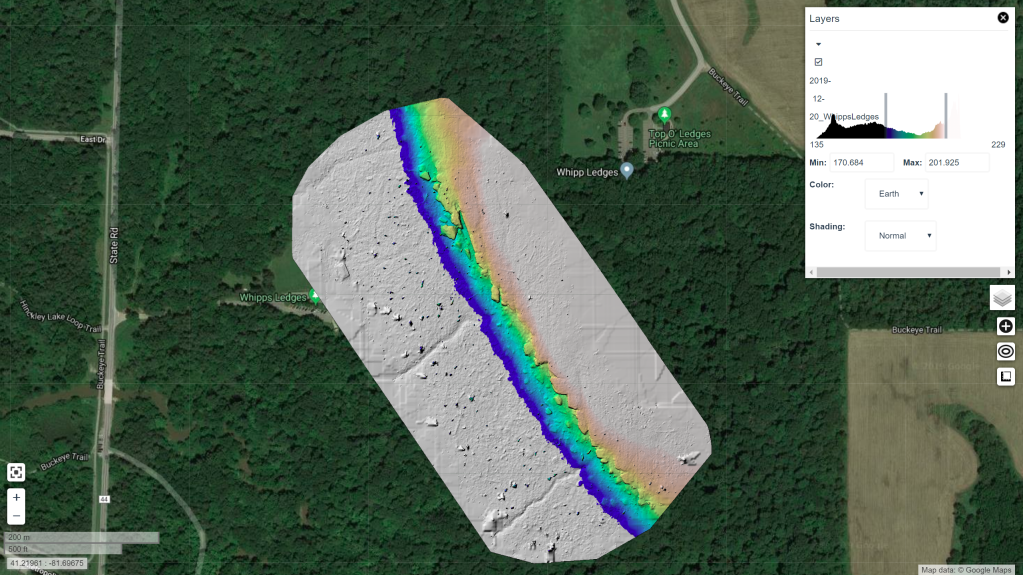

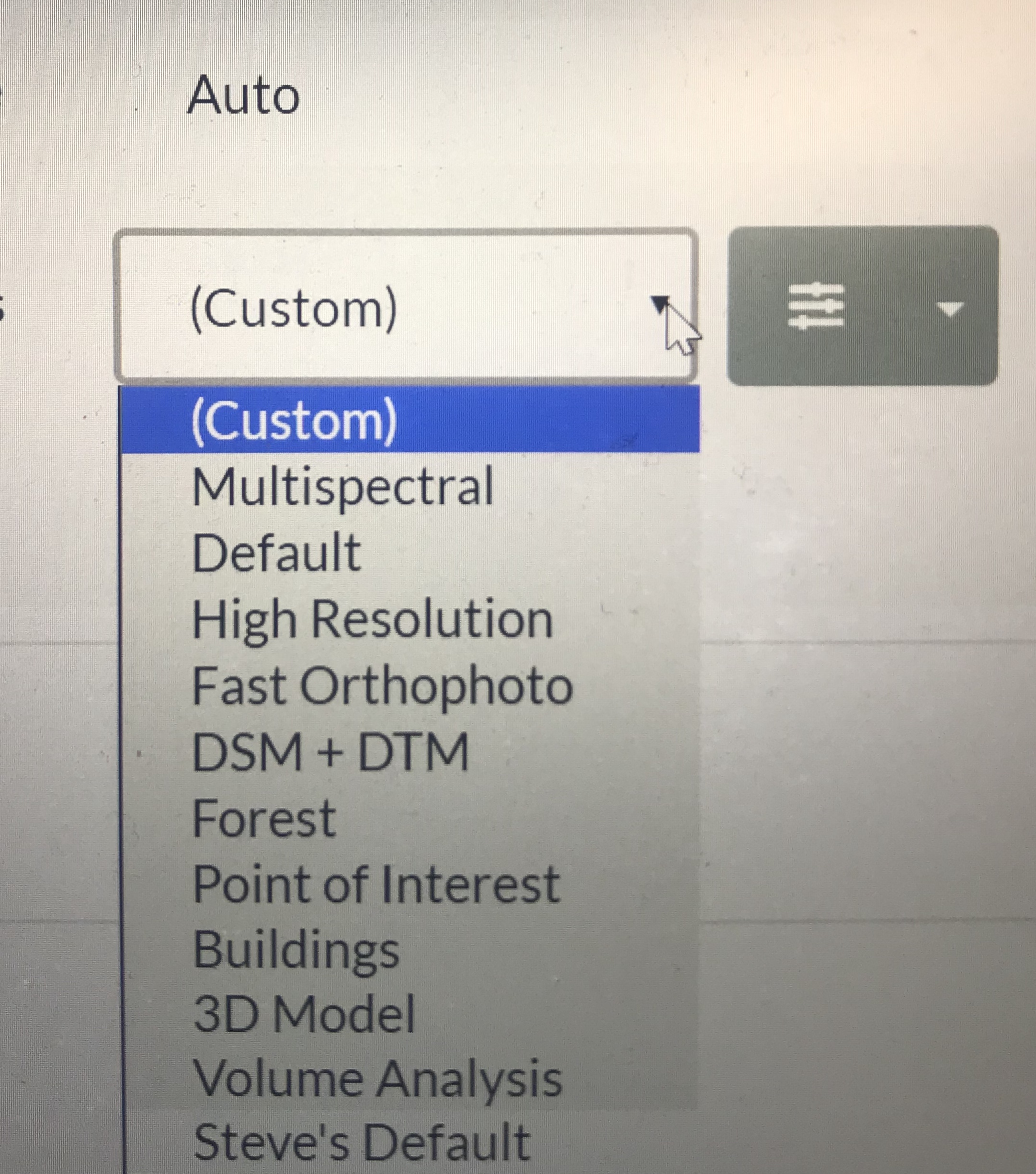

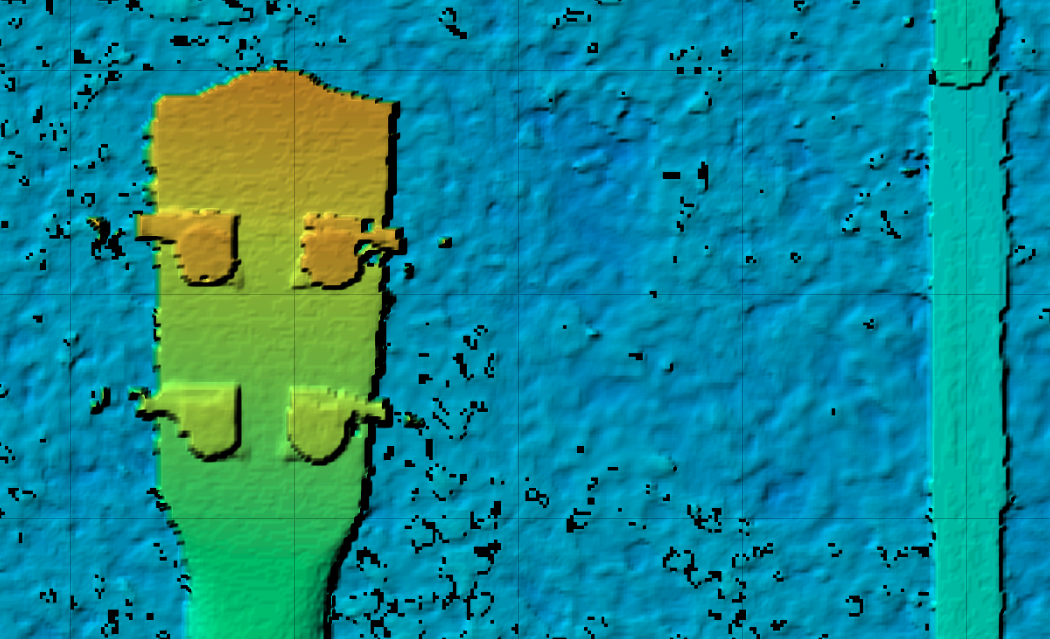

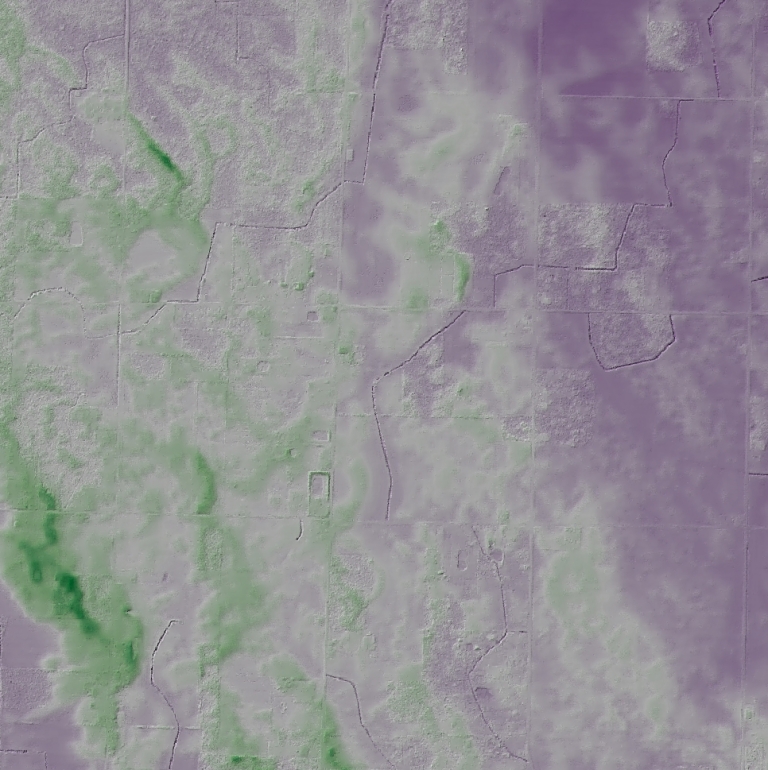

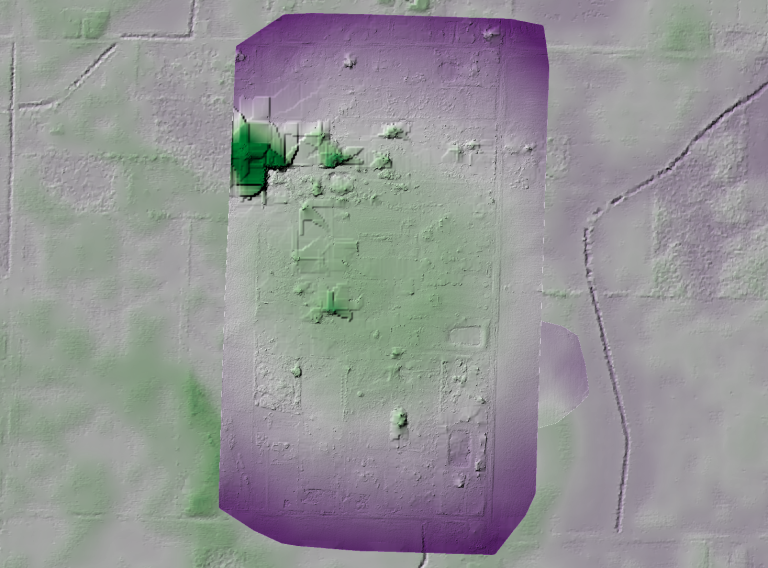

Multispectral Support (Experimental)

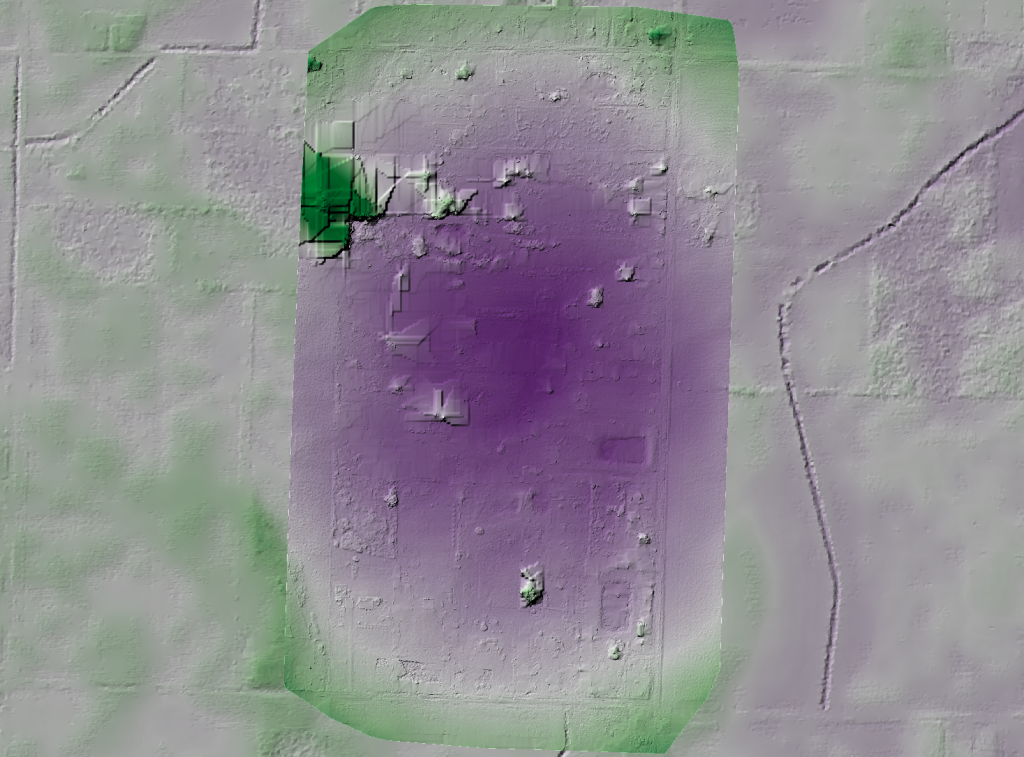

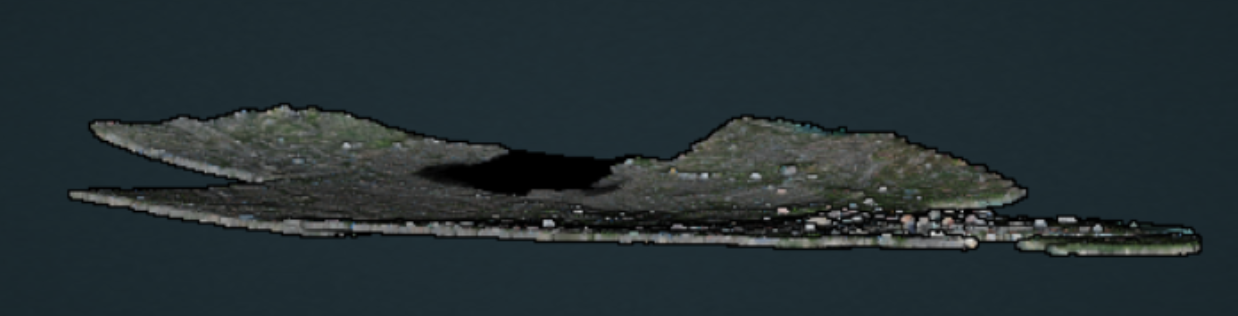

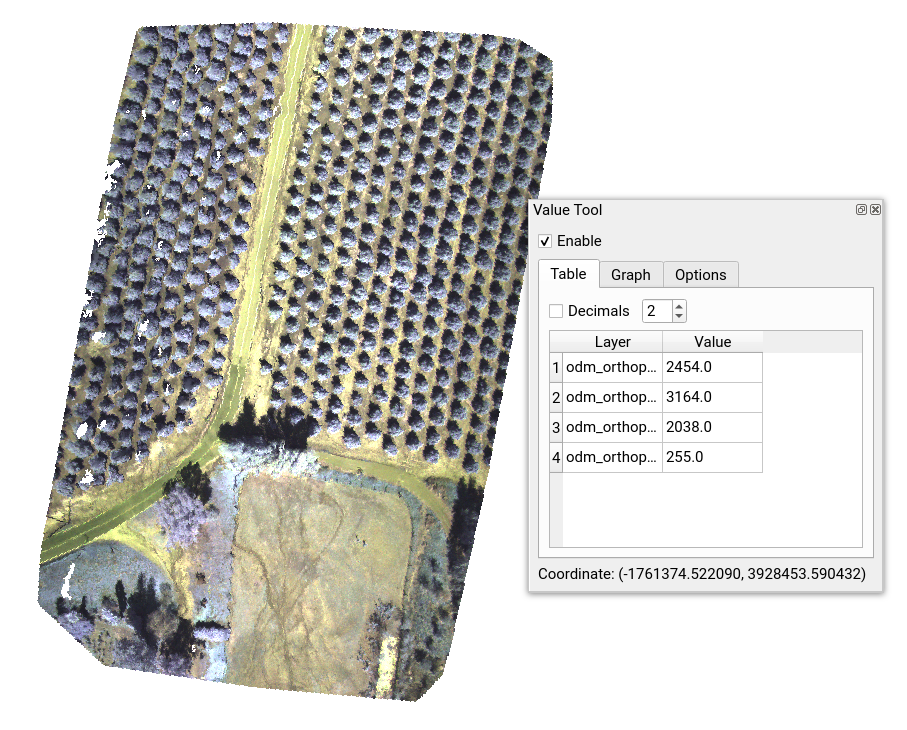

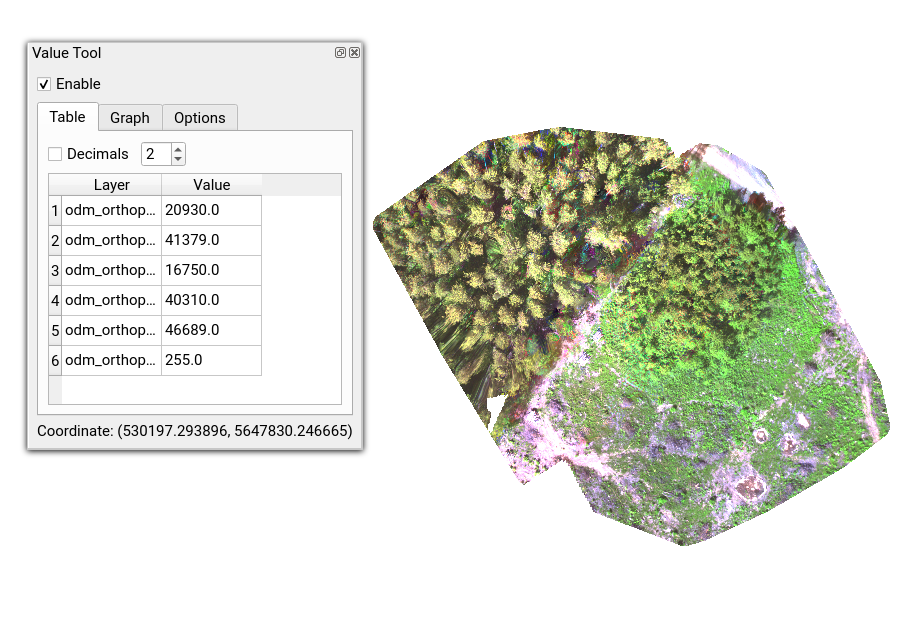

We have added the ability to process multispectral images (for example those captured with a Parrot Sequoia, MicaSense Altum or RedEdge). We have obtained some promising preliminary results. When provided with N camera bands, ODM will generate an N band orthophoto (in the proper bit resolution, up to 16).

We have not added support for spectral calibration targets, which we plan to add in the near future and we’re currently looking to add support for more cameras. The task of identifying different bands is different for each camera vendor and we’ll need to add support for more cameras with time. We hope the community will start to process some datasets and help us improve multispectral support (share your datasets?)

We recommend to pass the –texturing-skip-global-seam-leveling option when processing thermal/multispectral datasets. Global seam leveling will attempt to normalize the values distribution across orthophotos, which works well for making pretty RGB images, but will affect measured values in thermal/multispectral settings.

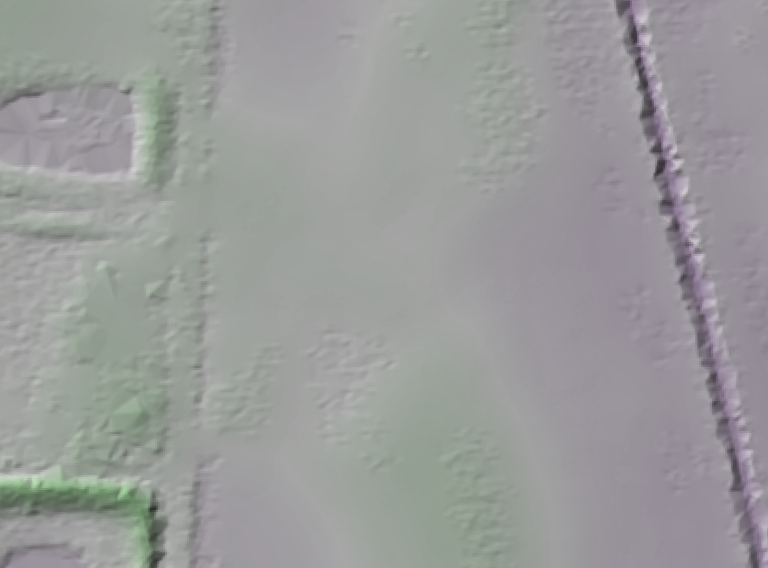

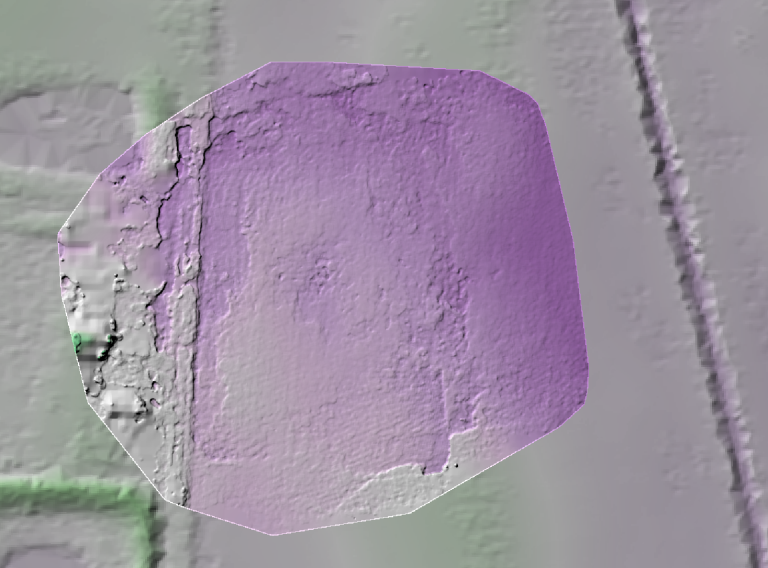

Split-merge Improvements

We rewrote from scratch the orthophoto cutline blending algorithm to merge orthophotos, a bottleneck that was causing processing to take longer than necessary in the last stage of the pipeline. The new algorithm is faster and much more memory efficient. We also sped up by a factor of 30x the time it takes to merge point clouds from submodels, as well as reducing memory usage drastically.

OpenSfM/MVE Updates

We brought the latest version of OpenSfM in this update, which delivers up to 1.6x faster image matching than before.

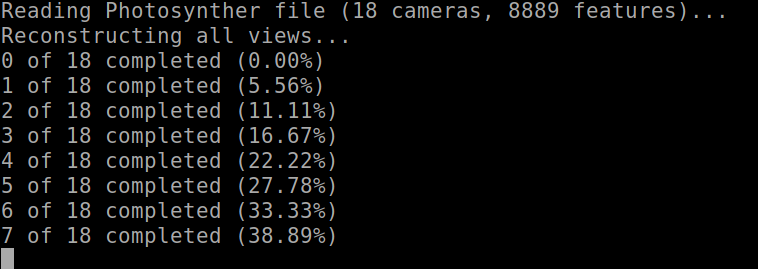

MVE has also been (finally) modified to report progress on the status of computations. You’ll finally know if the program is “stuck” or not.

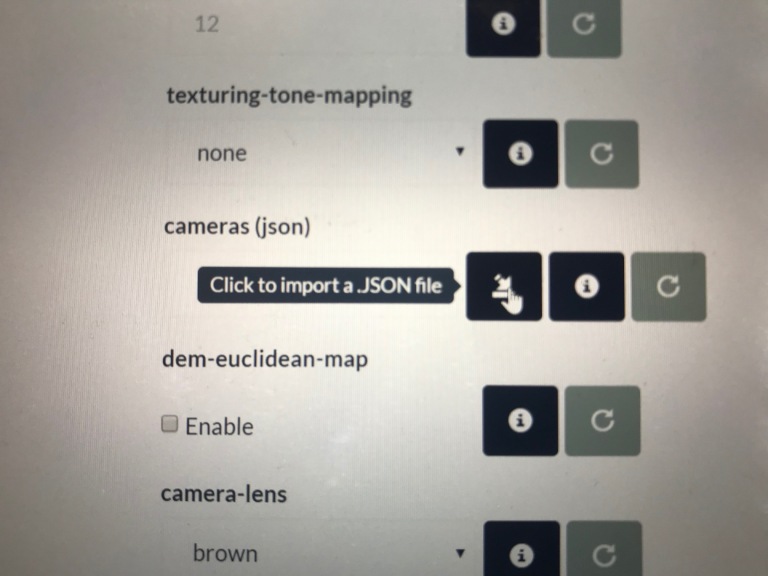

Improved Brown-Conrady Camera Model

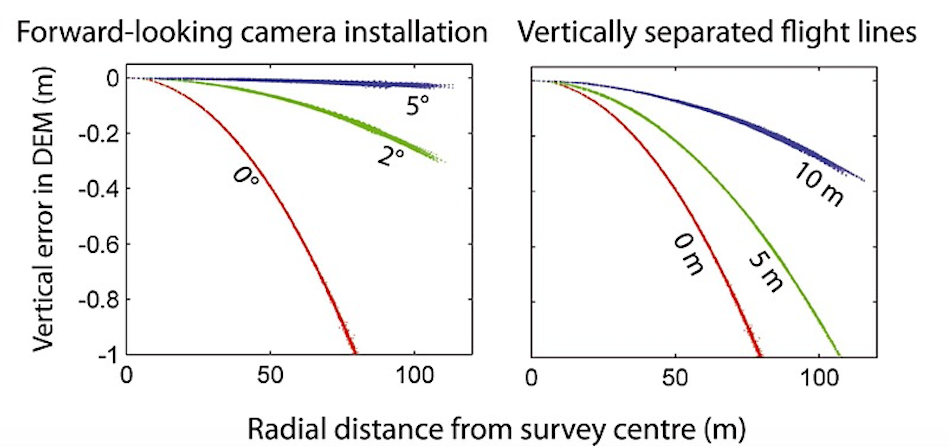

We made modifications to the camera model used to compute camera poses and points. Up until now the default in ODM has been to use a simplified perspective camera model. The community has been testing the usage of the brown-conrady model for a while, with great results. The original brown-conrady model however uses two parameters for specifying focal length, which are unfortunately not accurately supported by the texturing and dense reconstruction stages of the pipeline (a single focal length is used by those stages). We’ve approximated the brown-conrady computation by averaging the two focal lengths, but could we do better? Yes!

We modified the brown-conrady model to use a single focal length, bringing the model to full support for all stages of the pipeline and set it as the default camera model. We expect this will improve the quality of results for all outputs. Preliminary tests confirm this.

Easier To Contribute to ODM

If you are on Linux, it’s now easier than ever to make a change to ODM. A new script setups a development environment for you inside a docker container.

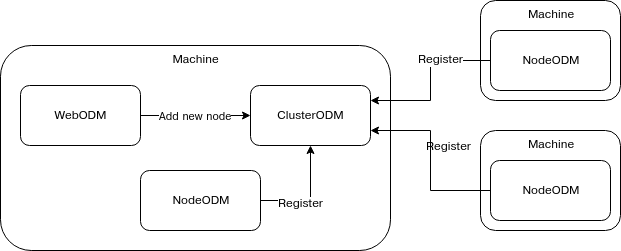

And One More Thing: NodeODM Changes

NodeODM now exposes a task list API endpoint. This is a feature that has been requested a lot and allows people to view the tasks running on a particular node. If you send a task to NodeODM via WebODM (or CloudODM or PyODM, or any other client), if you open the NodeODM interface you will be able to monitor and manage the task. This is also implemented in ClusterODM.

We have also replaced the .zip compression method in NodeODM to be faster, more memory efficient.

What do you think of the new changes? Try them out and report any bug.